AI needs so much computing power that AMD CEO Lisa Su put it in terms of a unit most people have never heard of: the yottaflop.

Su said in her keynote at CES 2026 on Tuesday that the world would need more than “10 yottaflops” of compute — a measure of how fast a computer is — over the next five years to keep up with AI’s growth.

“How many of you know what a yottaflop is?” Su asked the audience. “Raise your hand, please,” she added, before quickly explaining the term herself when no one appeared to raise their hand.

“A yottaflop is a one followed by 24 zeros. So 10 yottaflops is 10,000 times more compute than we had in 2022,” she said.

In computing, a flop is a floating-point operation, a single basic math calculation, and a computer’s speed is measured in how many of them it performs per second. A computer doing 1 billion calculations per second runs at one gigaflop. One yottaflop is one septillion calculations per second.

In theory, scientists say 10 yottaflops would be enough computing power to run complex, atom-level simulations for entire planets.

In 2022, global AI compute stood at about one zettaflop — a one followed by 21 zeros. By 2025, Su said, that figure had already surged to more than 100 zettaflops.
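The multiples in Su's figures can be checked with a few lines of arithmetic. The sketch below is only an illustration: the variable names are made up here, and the 2025 value treats "more than 100 zettaflops" as exactly 100, so the last ratio is an upper bound rather than a precise number.

```python
# SI prefixes as powers of ten (standard definitions).
ZETTA = 10**21
YOTTA = 10**24

compute_2022 = 1 * ZETTA      # about one zettaflop, per the keynote
compute_2025 = 100 * ZETTA    # "more than 100 zettaflops", treated as a floor (assumption)
compute_target = 10 * YOTTA   # Su's 10-yottaflop figure

growth_2022_to_target = compute_target // compute_2022
growth_2022_to_2025 = compute_2025 // compute_2022
growth_2025_to_target = compute_target // compute_2025

print(growth_2022_to_target)   # 10000
print(growth_2022_to_2025)     # 100
print(growth_2025_to_target)   # 100
```

On those numbers, the jump Su described for the next five years is roughly the same multiple, about 100 times, as the one she said had already happened between 2022 and 2025.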

“There’s just never, ever been anything like this in the history of computing,” she said at the Las Vegas conference.

Su’s 10-yottaflop figure is about 5.6 million times the speed of today’s most powerful supercomputer, the US Department of Energy’s El Capitan.
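The article does not give El Capitan's speed, but the comparison implies one. As a rough sketch, dividing the 10-yottaflop figure by the stated 5.6 million multiple backs out the supercomputer speed the comparison assumes. The names below are illustrative, and the result is an inference from the article's own numbers, not an official benchmark.

```python
TARGET_FLOPS = 10 * 10**24   # 10 yottaflops
RATIO = 5.6e6                # "about 5.6 million times", as stated in the article
EXA = 10**18                 # one exaflop

# Speed of the reference supercomputer implied by the comparison.
implied_flops = TARGET_FLOPS / RATIO
implied_exaflops = implied_flops / EXA

print(round(implied_exaflops, 2))  # 1.79
```

That works out to a machine running at a little under 1.8 exaflops, or about 1.8 quintillion calculations per second.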

However, powering today’s AI compute is already putting a strain on the US power grid. The build-out of energy infrastructure would be a big bottleneck in scaling up AI compute power.

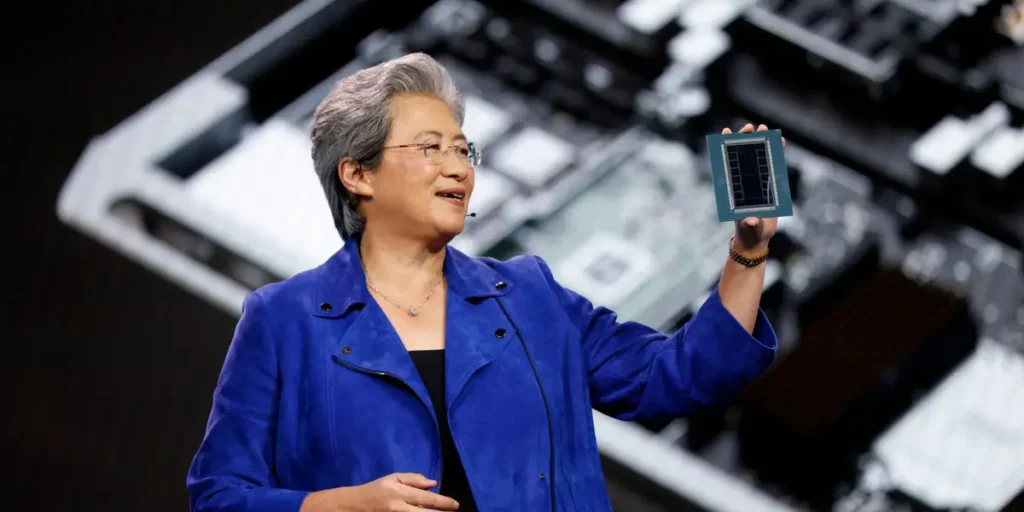

During the keynote, Su also used the stage to unveil AMD’s next generation of AI chips, including its MI455 GPU, as the company pushes deeper into supplying data-center hardware for customers such as OpenAI.